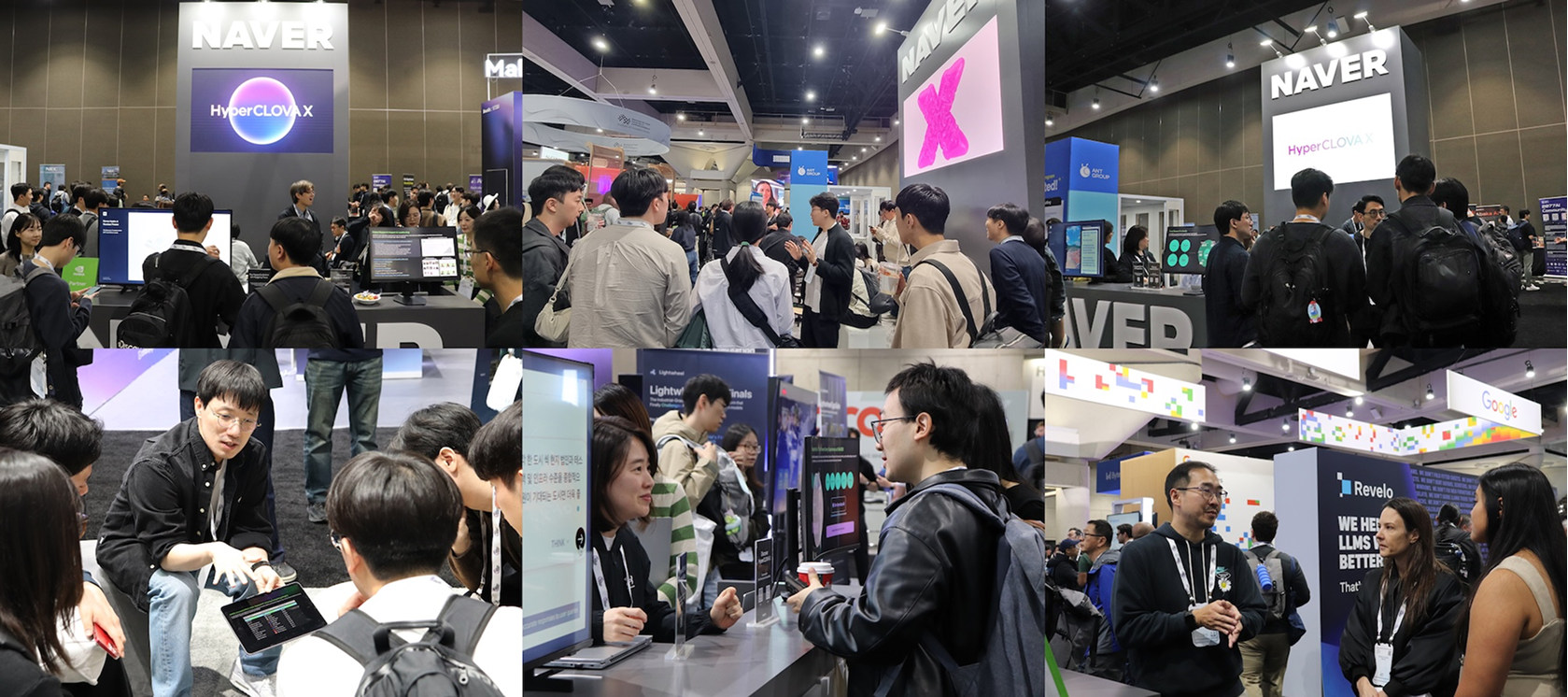

In early December, NeurIPS 2025—the world’s largest AI and machine learning conference—took place in San Diego. Researchers, universities, and companies from around the globe gathered to discuss the future of AI, and NAVER was there as a key participant.

At our booth, we presented 9 research papers and showcased demos of our latest technologies, alongside networking programs. In this post, we share the global trends we observed firsthand, the significance of our research within the field, and the possibilities we glimpsed at the event.

Experiencing AI’s present in San Diego

With over 29,000 attendees—a record high—this year’s NeurIPS was bustling from day one. From the latest LLMs to robotics and multimodal models, new research and experimental findings emerged from every corner, and discussions ran nonstop through talk sessions and poster presentations.

From research to reality

NAVER’s booth drew considerable attention in this atmosphere. Our work on running efficient hyperscale models, controlling and ensuring the safety of generative AI, and applying AI in the real world resonated with global researchers.

Attendees interacted with our demos and discussed the technologies covered in our papers. Many also expressed strong interest in how this research has shaped NAVER’s services and industry solutions. Recruiting consultations were equally active, leading to meaningful conversations with a wide pool of talented individuals.

One thing we heard repeatedly was: “The strategy of pursuing both fundamental research and industry technologies at the same time stood out.” This validated NAVER’s approach to AI research—and reaffirmed our commitment to it.

NAVER’s 2025 AI research themes: Efficiency, control, and real-world AI

The question we heard most often was: “What kind of AI does NAVER want to build?“

Our research contains the answer.

Here, we present the 9 papers accepted at NeurIPS 2025—centered on efficiency, control, and real-world AI—that speak directly to this question.

Part 1. Smaller but faster: Efficient LLM and infrastructure

KVzip: KV cache compression—keeping only what matters to boost efficiency

The longer a conversation gets, the more context a model needs to retain—as memory usage grows, costs rise and speed drops.

KVzip approaches this challenge from a fresh angle. Think of it as organizing a cluttered desk: through simple compression, it keeps only the essential information. The model preserves important context while operating far more lightly, with memory savings of up to 4 times and speed improvements of nearly 2 times. The result is faster, more natural conversations.

To learn more: KVzip: Query-Agnostic KV Cache Compression with Context Reconstruction

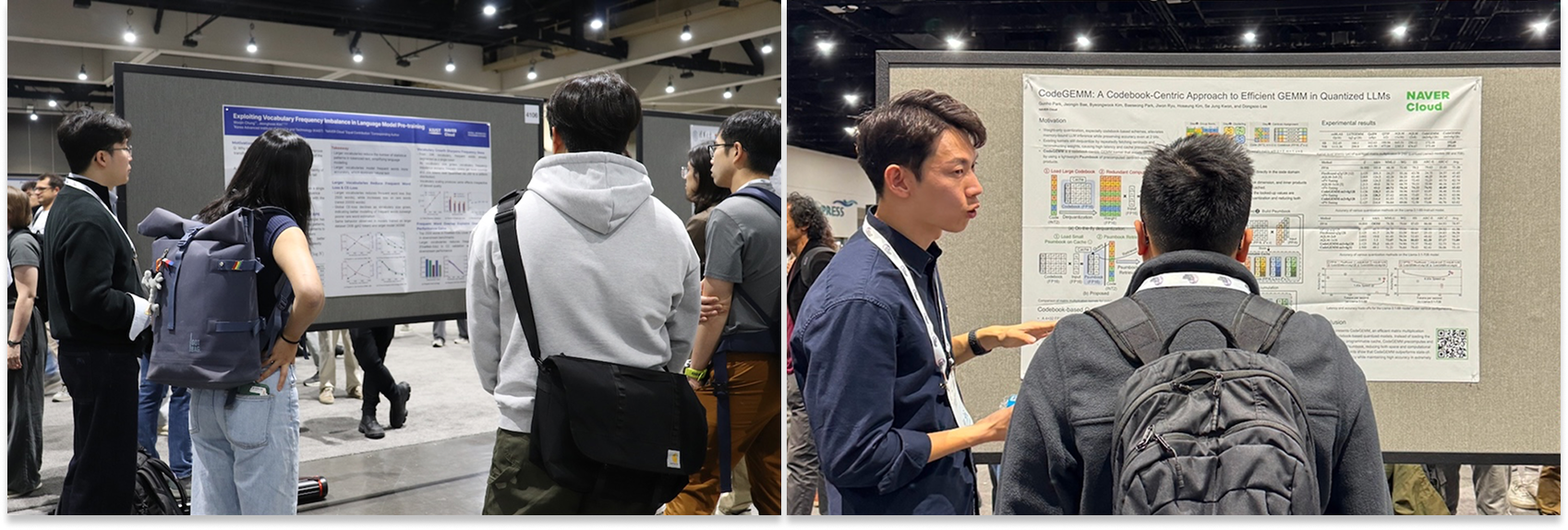

CodeGEMM: Fast computation without dequantization using an extremely low-bit LLM kernel

Quantized models are lightweight because they represent numbers in simplified form, but this typically requires an inconvenient step: restoring values to their original precision before computation. CodeGEMM skips this recovery process entirely, precomputing the necessary operations and assembling them during inference.

This means the model no longer repeats heavy computations each time. Even hyperscale models like 70B can run up to 8.93 times faster—a key technology for delivering the same AI capabilities with less energy and greater speed.

To learn more: CodeGEMM: A Codebook-Centric Approach to Efficient GEMM in Quantized LLMs

Vocabulary frequency: Analyzing language model efficiency through vocabulary distribution

Language models understand sentences by breaking them into small pieces called tokens. Recently, researchers observed that tokenizers with larger vocabularies showed better performance—but why?

Our research team found the answer through a simple observation: if a model can recognize frequently used words in a single token, it needs to memorize far fewer rules. In other words, the larger a model’s vocabulary, the more efficiently it can process common words, allowing it to learn the rules of language much faster. This research is the first to prove this relationship through both theoretical analysis and experimentation, offering insights that could serve as an important guideline for tokenizer design going forward.

To learn more: Exploiting Vocabulary Frequency Imbalance in Language Model Pre-training

Frequency-aware token reduction: Keeping only key information while reducing ViT tokens

Vision Transformers (ViT) deliver excellent performance in image processing, but analyzing every piece of an image leads to significant computational costs.

This research introduces a human-like approach to visual attention—distinguishing what to examine closely from what to skim. The model retains parts of an image with significant changes or high importance while condensing less critical regions into a single summary token. As a result, models can perform far fewer computations without compromising—or even while improving—their performance. It’s a practical solution for building smarter vision models at lower cost.

To learn more: Frequency-Aware Token Reduction for Efficient Vision Transformer

Part 2. Safer and more controllable: Controllability and safety

C-SEO Bench: Validating SEO effectiveness in conversational search environments

As conversational search gains momentum, many companies are asking: “How can we make AI surface our content more effectively?”—a challenge known as Conversational SEO (C-SEO). Until now, however, there has been no objective evaluation method to determine whether such strategies actually work.

In this research, we propose C-SEO Bench, a new benchmark that measures effectiveness across various tasks and domains. Our experiments revealed that many of the latest C-SEO methods fail to work as expected—some even underperform compared to traditional SEO approaches.

Our research team has released the datasets, code, and benchmarks as open source for further research. Details are available in the paper.

To learn more: C-SEO Bench: Does Conversational SEO Work?

DATE: Text embedding that dynamically adapts to the generation process

Previous image generation models incorporate text descriptions only once, making it difficult to follow user intent closely when details shift even slightly during generation.

Diffusion Adaptive Text Embedding (DATE) overcomes this limitation by re-reading and updating the text at every step of the generation process. Using intermediate data from each stage, it checks whether the current image matches the text well, then progressively refines the embeddings according to optimization rules.

This allows the model to interpret user intent much more naturally and accurately—particularly in complex scenes with multiple subjects or in text-based editing tasks. A major advantage is that this adaptability requires no additional training.

To learn more: Diffusion Adaptive Text Embedding for Text-to-Image Diffusion Models

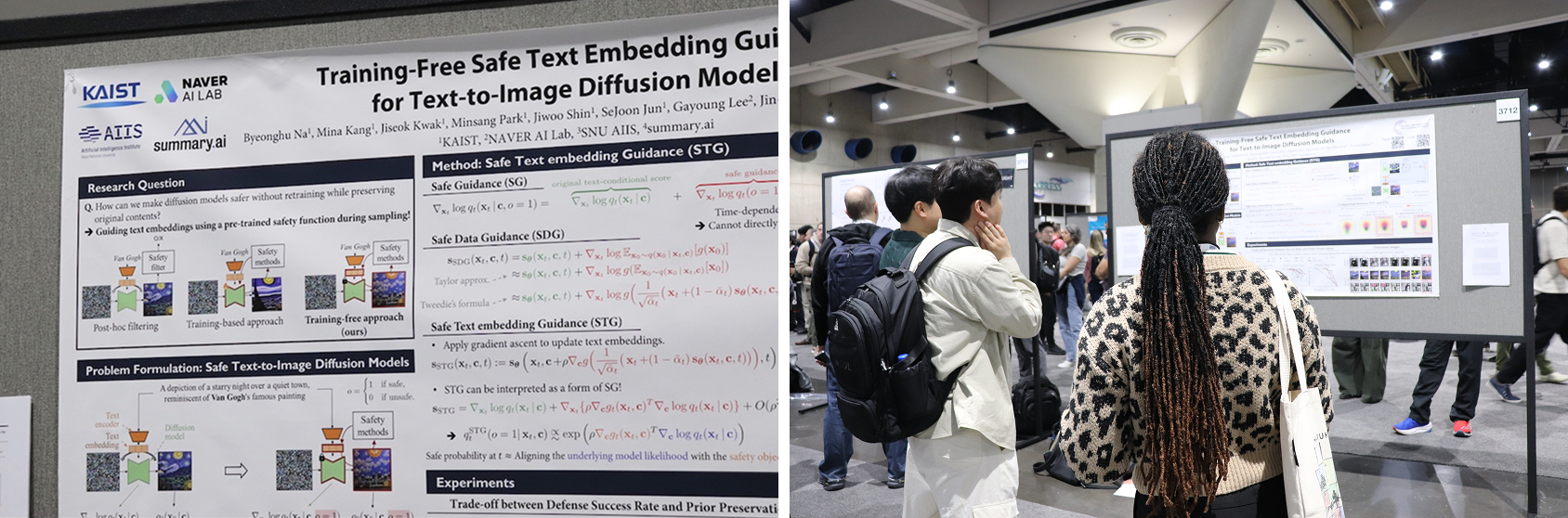

Training-free safe guidance: Sampling guidance that enhances safety without retraining

As image generation models have improved dramatically, so has the risk of generating unintended harmful content. Previously, retraining was the common approach to improving safety, but it was costly, time-consuming, and difficult to apply in real-world settings.

Safe Text Embedding Guidance (STG) offers a new solution that requires no additional training. During generation, the model evaluates danger signals based on the expected final denoised images and adjusts text embeddings accordingly, guiding it toward safer outputs.

This method aligns the model’s distribution naturally with safety constraints while preserving the original prompt’s meaning. In our experiments, it reduced various risk factors—explicit content, violence, and art style imitation—while generating safer images than existing methods. The ability to deploy this without retraining is a significant advantage for production environments.

To learn more: Training-Free Safe Text Embedding Guidance for Text-to-Image Diffusion Models

Part 3. Physical AI: Expanding into the real world

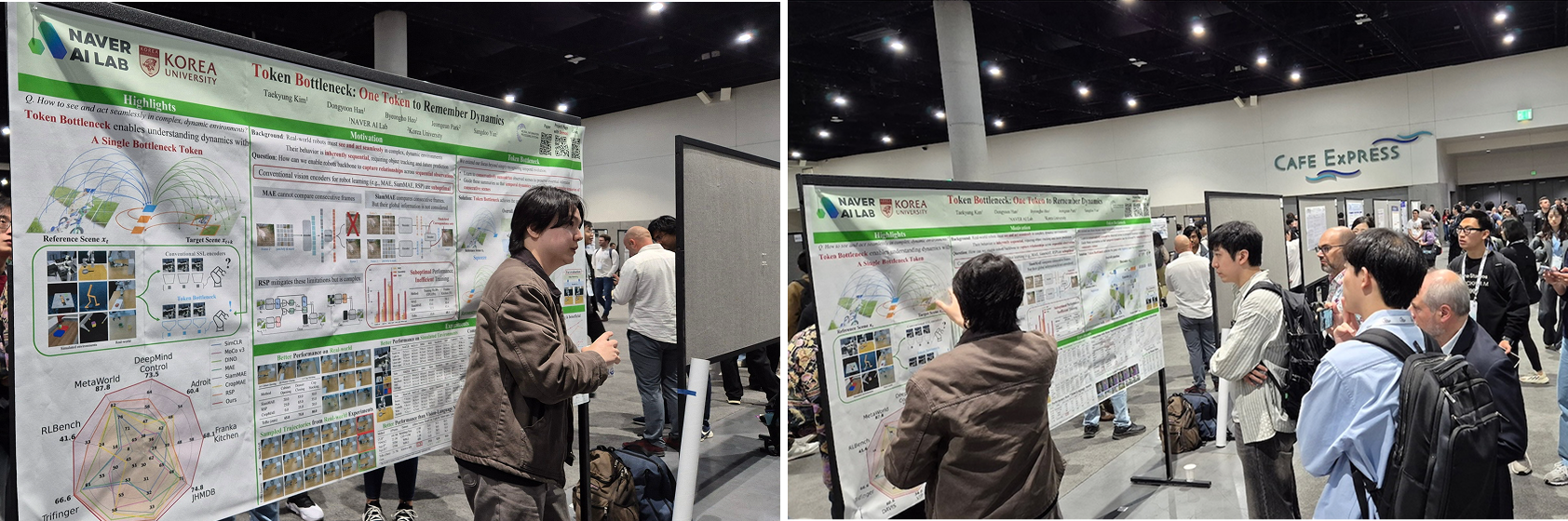

ToBo: Dynamic representation training that summarizes scene changes with a single token

Scenes in videos or robot movements change continuously over time, but most models try to remember everything frame by frame, leading to inefficiency. Token Bottleneck (ToBo) takes an entirely different approach.

The learning pipeline compresses a scene’s essential information into a single bottleneck token (squeezing), then predict the subsequent scene using this token and minimal patches as hints (expansion). Through this process, the model naturally learns to understand temporal dynamics—all through self-supervised learning without labels.

In our experiments, ToBo demonstrated more reliable and consistent performance improvements across diverse tasks—including video label propagation and robot manipulation in simulated environments. It’s a practical approach that captures movement dynamics deeply with minimal information.

To learn more: Token Bottleneck: One Token to Remember Dynamics

Kinaema: A recurrent model that tracks memory and location through movement

In environments where robots move continuously, one of their key capabilities is locating themselves and remembering which paths they’ve taken.

Kinaema addresses this challenge with a recurrent sequence model for memory and pose that remains reliable over time. Even as scenes change, it stores and accumulates the necessary information, then uses it to determine the next movement—allowing robots to navigate more accurately with less computation.

In our experiments, robots operated reliably not only in simulations but also in real-world environments, proving it can navigate changing spaces without losing its way.

To learn more: Kinaema: a recurrent sequence model for memory and pose in motion

Back in Korea: Preparing for the next chapter

Our time at NeurIPS 2025 was more than an exhibition—it was a chance to showcase NAVER’s AI vision to a global audience.

From efficient hyperscale models to safe and controllable generative AI to real-world AI that extends into the physical world, we demonstrated both the practicality and potential of our research through sessions and demos. At the same time, active discussions with researchers worldwide sparked new ideas, raised new questions, and helped us sharpen our technology philosophy even further.

Now back from San Diego, we’re at the research table once again—turning this experience into another step toward innovation. We hope you’ll follow along as we pursue more meaningful, more practical, and safer AI in the years ahead.