As AI technology becomes increasingly integrated into our workflows, organizations need more than just demos—they need generative AI adoption strategies that can scale to production. Recognizing this critical need, NAVER Cloud hosted AI Dev Day on July 15 in Seolleung, Seoul, bringing together over 190 AI developers and engineers from across the industry.

The conference served as a vital platform for sharing practical insights on implementing generative AI in real-world services. Sessions covered everything from current LLM technology trends to multi-agent architectures, audio-specialized models, and implementation strategies using Model Context Protocol (MCP). The event struck an ideal balance between practical application and technical depth, with participants praising the “actionable content they could apply to work immediately.”

In this post, we’ll walk you through all the sessions from AI Dev Day, covering the comprehensive range of generative AI strategies and architectural insights that can help you maximize the impact of AI in your own projects.

Exploring LLM trends and real-world applications

Kang Jina, NAVER Cloud technical evangelism team

The technology landscape around large language models (LLMs) is evolving rapidly. Three key trends are driving this transformation:

- Expansion into the physical world

- Rise of agentic AI

- Importance of interoperability

Let’s explore how these trends are shaping the future of AI technology and services.

1. LLMs moving beyond digital to real-world applications

LLMs are no longer confined to digital screens; they are starting to extend beyond digital interfaces into the physical world. OpenAI announced plans to release its own AI devices in 2026, and Google is preparing to create a Gemini-powered hardware platform including Pixel smartphones and smart glasses.

These developments signal an accelerating transformation toward an AI ecosystem centered on physical hardware. LLMs are no longer limited to web browsers or mobile apps. As they integrate with devices, sensors, and real-world data sources, they’ve evolved from simple data processing tools into systems that can understand and interact with the physical world in real time.

2. The rise of agentic AI that can think and act autonomously

Another significant technological trend is agentic AI—systems that can think and act independently. While LLMs traditionally respond to instructions, they’re now developing the ability to make plans, solve problems, and perform tasks proactively.

Today’s LLMs can autonomously handle complex workflows: searching the web, summarizing materials, writing responses, reviewing outputs, and delivering final results—all without human intervention at each step.

NAVER’s recent reasoning model, HyperCLOVA X THINK, exemplifies this agentic AI approach. This open-source model can adapt conversations flexibly to various situations and enables proactive language-based interactions, and it is available to try on Hugging Face.

Agentic AI is finding practical applications across industries:

- Security: Detecting potential threats and automatically generating response protocols

- Supply Chain Management (SCM): Performing comprehensive analysis including inventory, market trends, and external metrics to predict demand

- Customer Relationship Management (CRM): Providing behavior-based personalized recommendations and automated responses

By incorporating retrieval-augmented generation (RAG), multi-agent frameworks, and other advanced methods, AI agents are becoming increasingly sophisticated and autonomous systems.

3. Interoperability: Connection and collaboration in the AI era

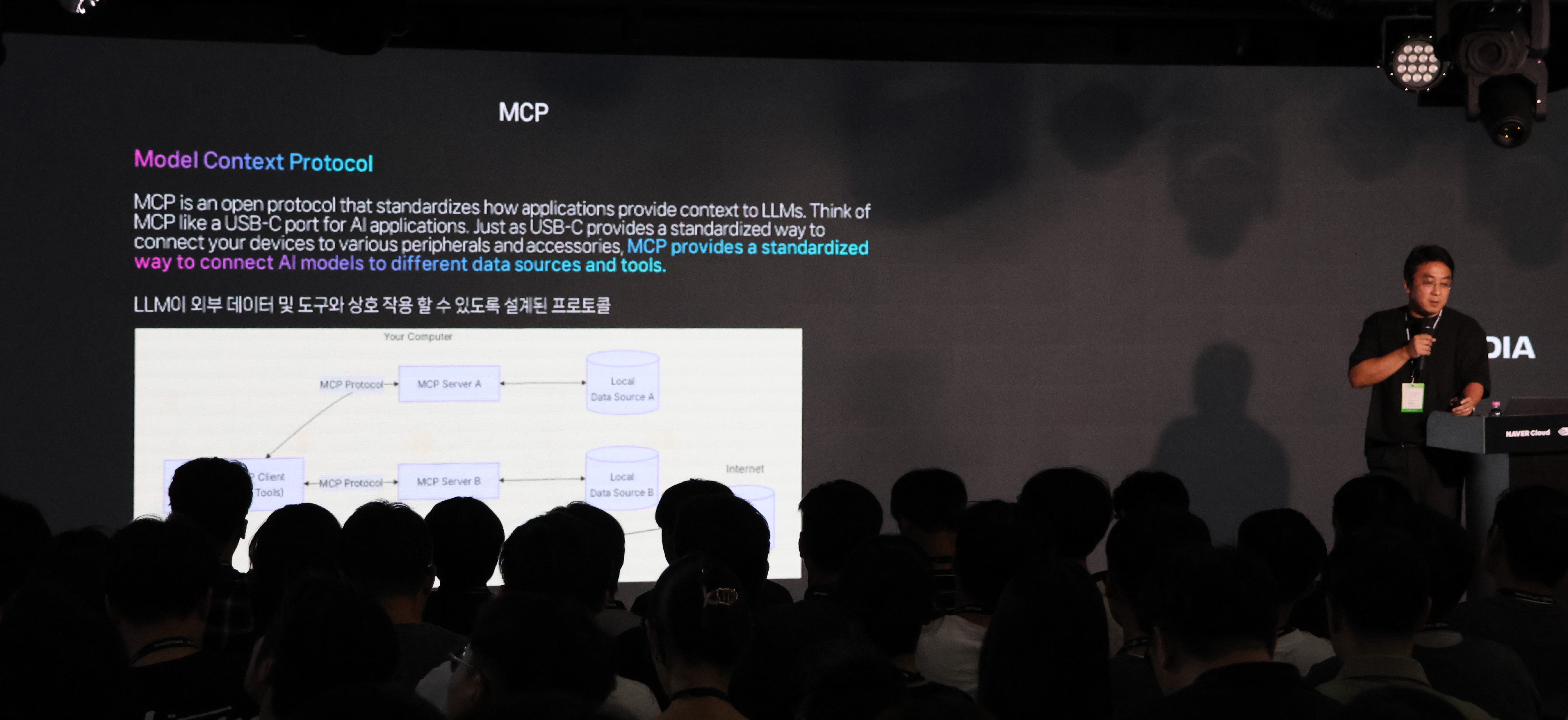

Agentic AI systems no longer work in isolation. Modern LLMs must connect to external tools, data sources, and systems to function as true agents. Model Context Protocol (MCP) exemplifies this need for interoperability.

MCP is a protocol that seamlessly links various data sources and tools to LLMs. Traditionally, integrations required significant resources due to differing API formats. MCP makes linking more flexible by abstracting the interface differences between tools and models.

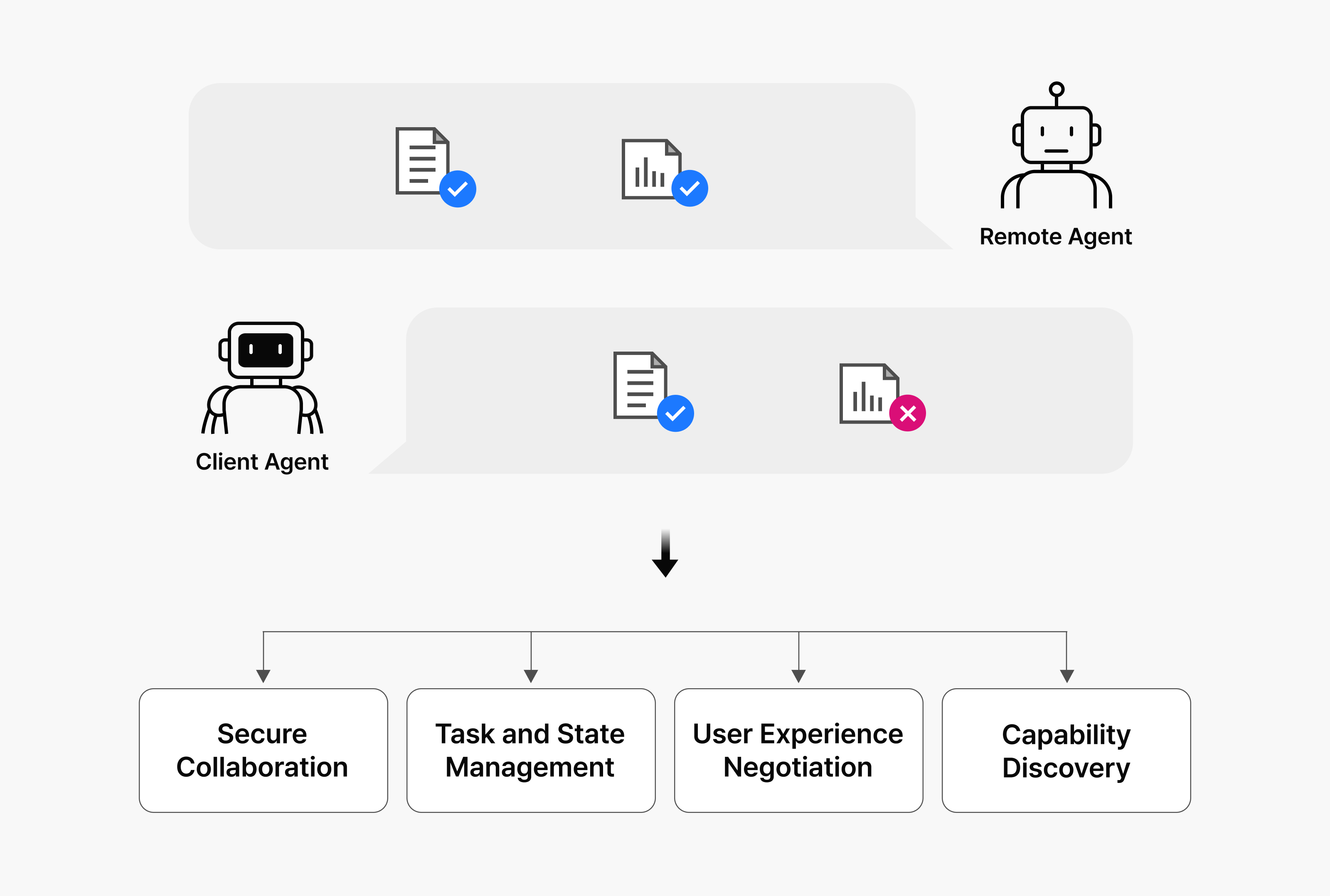

This represents a global trend. Google recently announced the Agent2Agent (A2A) protocol with its technology partners, creating a multi-agent ecosystem where domain-specialized agents communicate and collaborate. Because AI agents no longer work in isolation, the crucial question now is how to design AI systems that work together effectively.

Agent2Agent (A2A) protocol

How can we maximize AI agent potential?

Three key factors are essential for properly utilizing AI agents:

- Set clear goals.

Don’t just say “let’s use AI.” We should ask ourselves: Why are we introducing this agent? What specific problem are we solving? Setting concrete goals and expectations upfront is critical.

- Experiment and evaluate continuously.

The generative AI ecosystem changes daily. It’s important to remain open to new frameworks, technologies, and methods, actively testing them and flexibly reviewing their impact on our systems.

- Focus on application-level innovation.

We shouldn’t be satisfied with merely connecting good models. Instead, we must analyze issues from users’ perspectives, examining what value AI creates in real-world services and user experiences, and identifying new ways to solve existing problems. Once issues are identified, we must leverage AI to address them effectively.

Today, more than raw model performance, it’s important to consider how to connect models, what structures to implement them in, and what kind of experiences you want to create. LLMs are no longer merely technology—they’re tools for designing experiences.

In today’s AI-driven era, what kind of agents are you building?

Watch the full session: Exploring LLM trends and real-world applications

Building multi-agent AI architectures

Heo Changhyeon, NAVER Cloud Solution Architect

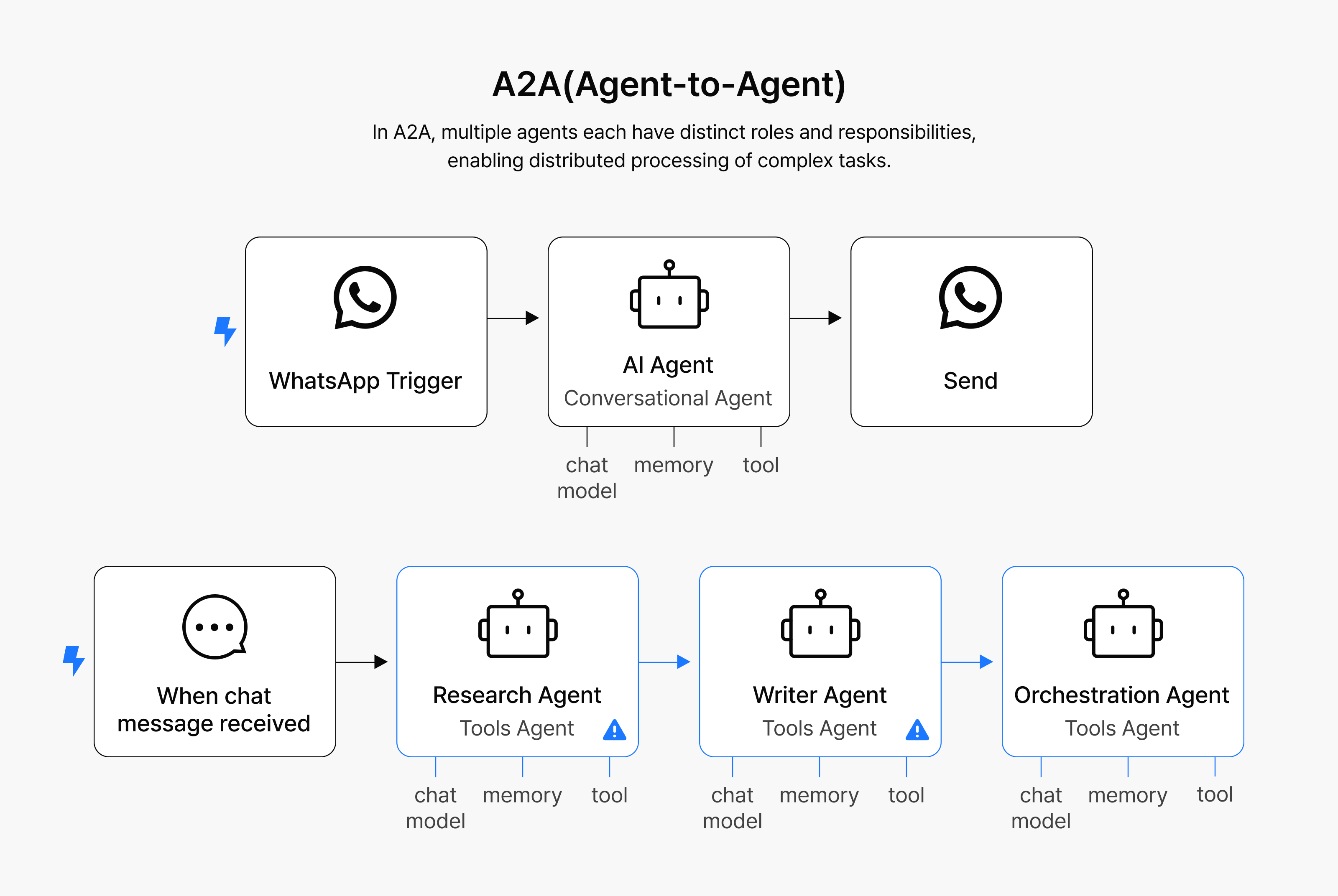

We’ve moved past the era of viewing AI as a single model. Today, multi-agent architectures are gaining attention, where multiple AI systems work together, each serving distinct purposes and roles. In this session, we explored why multi-agents are crucial, how to implement them, and the various frameworks and architectural strategies that support this technology.

Why do we need multi-agents?

A single agent cannot handle complex and sophisticated tasks effectively. Natural language to SQL (NL2SQL) technology, for example, frequently faces hallucination and accuracy issues.

Overcoming these limitations requires a structure where multiple agents with distinct roles collaborate incrementally. When performing NL2SQL tasks, for instance, you need the following specialized agents:

- Agent that extracts keywords

- Agent that validates SQL standards compliance

- Agent that verifies whether generated queries actually work
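As a rough illustration of this division of labor, the sketch below wraps three role-specific checks around candidate SQL queries using Python’s built-in `sqlite3` module. It is a minimal sketch under stated assumptions: each agent is a deterministic stand-in (a real system would back each role with an LLM call), the `candidates` list stands in for the output of a hypothetical SQL-generation agent, and the `orders` table is invented for the example.

```python
import re
import sqlite3

# Hypothetical stand-ins for LLM-backed agents. In a real NL2SQL system each
# role would wrap a model call; here they are simple deterministic checks.

def keyword_agent(question: str, schema: dict[str, list[str]]) -> list[str]:
    """Extract the table and column names that the question mentions."""
    tokens = set(re.findall(r"[a-z_]+", question.lower()))
    found = [table for table in schema if table in tokens]
    for columns in schema.values():
        found.extend(col for col in columns if col in tokens)
    return found


def validation_agent(conn: sqlite3.Connection, sql: str) -> bool:
    """Check that SQLite can compile the query (syntax, tables, columns)."""
    try:
        conn.execute("EXPLAIN " + sql)  # compiles the statement without running it
        return True
    except sqlite3.Error:
        return False


def execution_agent(conn: sqlite3.Connection, sql: str) -> list[tuple]:
    """Run the query and return its rows; an empty result counts as a failure."""
    return conn.execute(sql).fetchall()


def nl2sql_pipeline(conn, schema, question, candidates):
    """Return the first candidate that passes all three agents, plus its rows."""
    keywords = keyword_agent(question, schema)
    for sql in candidates:
        if not all(k in sql.lower() for k in keywords):
            continue  # candidate ignores part of the question
        if not validation_agent(conn, sql):
            continue  # not valid SQL for this schema
        rows = execution_agent(conn, sql)
        if rows:
            return sql, rows
    return None, []


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount INTEGER)")
conn.executemany("INSERT INTO orders VALUES (?, ?)",
                 [("east", 10), ("west", 5), ("east", 7)])

schema = {"orders": ["region", "amount"]}
question = "What is the total amount of orders per region?"
candidates = [
    "SELECT region, SUM(amount) FROM orders GROUP BY regoin",  # misspelled column
    "SELECT region, SUM(amount) FROM orders WHERE amount > 100 GROUP BY region",
    "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region",
]
sql, rows = nl2sql_pipeline(conn, schema, question, candidates)
print(sql)
print(rows)  # [('east', 17), ('west', 5)]
```

The first candidate is rejected by the validation agent (unknown column), the second compiles but returns no rows, and only the third survives every check, which is the kind of incremental filtering a single generate-once agent lacks.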

When agents with specialized roles divide the work and collaborate, we can increase both accuracy and reliability simultaneously. In our own implementation, we built a threat detection system based on log pattern analysis using a multi-agent structure in which the following models worked together:

- Model that detects specific log patterns

- Model that analyzes detected error types in detail

- Model that guides users toward appropriate follow-up measures

These models functioned as one team, enabling faster and more accurate detection and response.
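The same three-role structure can be sketched in a few functions. This is a hypothetical illustration, not NAVER Cloud’s actual system: the regular expressions, error categories, severity threshold, and recommended actions are invented placeholders, and each “model” is a plain function standing in for an ML or LLM component.

```python
import re
from dataclasses import dataclass

# Hypothetical detection rules; a production detector would be a trained model.
PATTERNS = {
    "auth_failure": re.compile(r"failed password for \S+ from (\S+)", re.IGNORECASE),
    "disk_error": re.compile(r"i/o error on device (\S+)", re.IGNORECASE),
}

# Hypothetical playbook mapping (error type, severity) to a follow-up action.
PLAYBOOK = {
    ("auth_failure", "high"): "Block the source IP and alert the security team.",
    ("auth_failure", "low"): "Keep monitoring the source IP.",
    ("disk_error", "high"): "Fail over to a replica and replace the disk.",
    ("disk_error", "low"): "Schedule a disk health check.",
}


@dataclass
class Finding:
    kind: str      # error type, e.g. "auth_failure"
    subject: str   # what the error is about (source IP, device, ...)
    count: int     # how many matching log lines were seen


def detector(lines: list[str]) -> list[tuple[str, str]]:
    """Model 1: flag log lines that match a known pattern."""
    hits = []
    for line in lines:
        for kind, pattern in PATTERNS.items():
            match = pattern.search(line)
            if match:
                hits.append((kind, match.group(1)))
    return hits


def analyzer(hits: list[tuple[str, str]]) -> list[Finding]:
    """Model 2: aggregate hits by error type and subject."""
    counts: dict[tuple[str, str], int] = {}
    for key in hits:
        counts[key] = counts.get(key, 0) + 1
    return [Finding(kind, subject, n) for (kind, subject), n in counts.items()]


def advisor(findings: list[Finding], threshold: int = 3) -> list[str]:
    """Model 3: map each finding to a recommended follow-up action."""
    advice = []
    for f in findings:
        severity = "high" if f.count >= threshold else "low"
        advice.append(f"{f.kind} ({f.subject}, {f.count}x): {PLAYBOOK[(f.kind, severity)]}")
    return advice


logs = [
    "sshd: Failed password for admin from 10.0.0.7 port 22",
    "sshd: Failed password for root from 10.0.0.7 port 22",
    "sshd: Failed password for admin from 10.0.0.7 port 22",
    "kernel: I/O error on device sda1",
]
report = advisor(analyzer(detector(logs)))
for line in report:
    print(line)
```

Keeping detection, analysis, and guidance separate means each stage can be swapped or retrained independently without touching the others.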

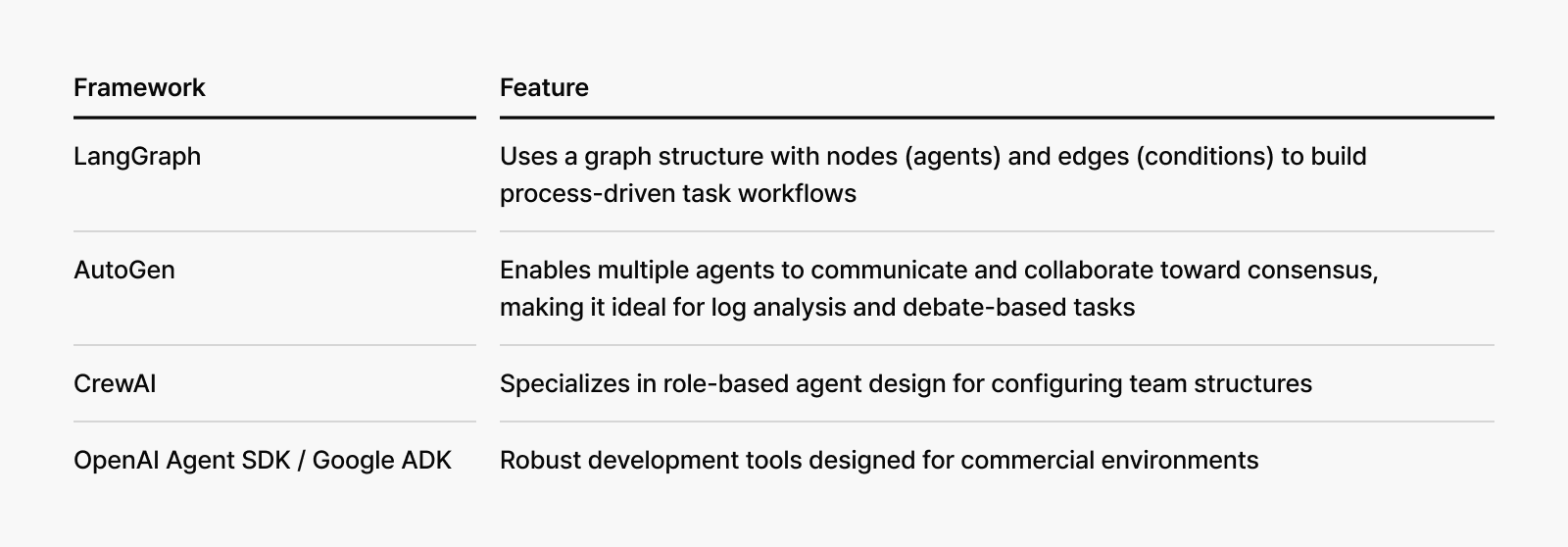

Which framework should we use?

To implement multi-agents, choosing the right framework is crucial. Based on what you’re trying to accomplish, you can flexibly combine any of the following tools:

Two popular architecture patterns

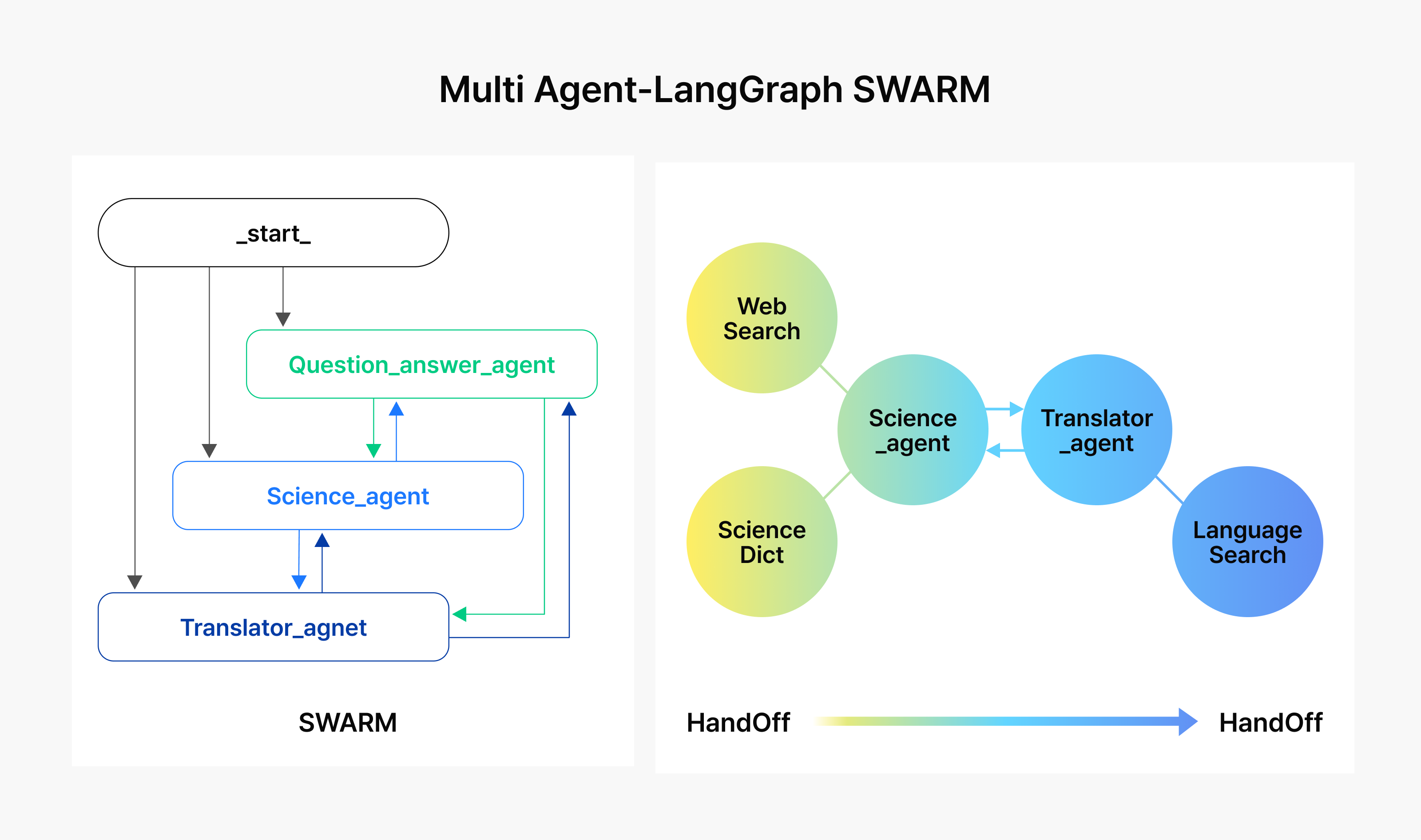

Configuring a multi-agent system requires the appropriate strategy. The most commonly used patterns are:

- Swarm pattern (decentralized)

- Agents use tool-calling and handoffs without central control

- Example: Search data → Translate → Answer question

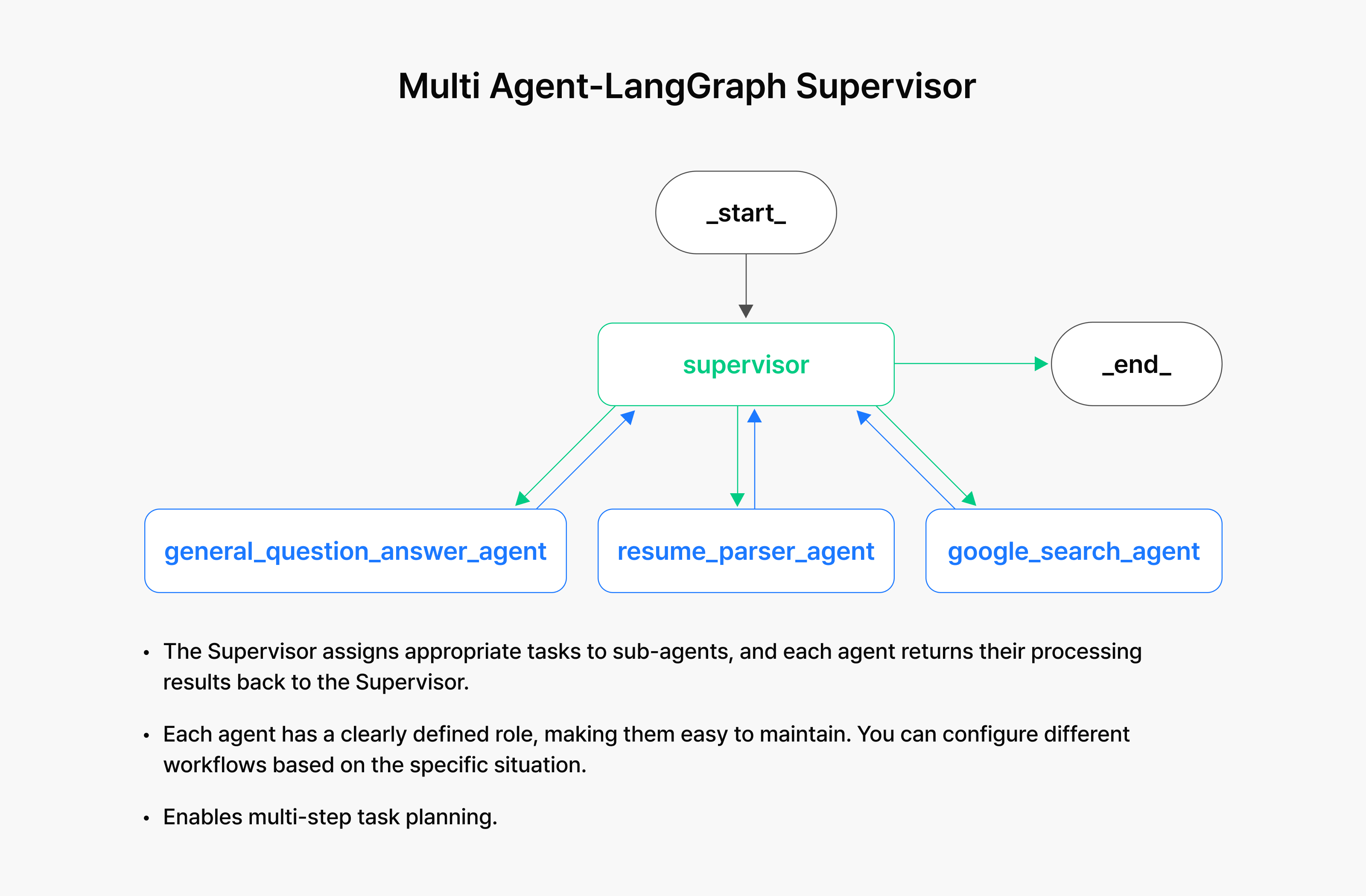

- Supervisor pattern (hierarchical)

- A supervisor agent orchestrates the sub-agents

- Example: One team collects data while another drafts summaries/reports

- The more complex a task, the more effective this pattern becomes compared to a single agent
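To make the difference between the two patterns concrete, here is a minimal, framework-free sketch in Python. The agent functions and the `Handoff` type are hypothetical illustrations, not the API of any particular multi-agent framework, and each agent’s “work” is stubbed out with string formatting. In the swarm version, each agent decides who goes next by returning a handoff; in the supervisor version, a single coordinator inspects shared state and decides which sub-agent runs.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Handoff:
    """Result of a swarm agent: its output plus the next agent to call (if any)."""
    output: str
    next_agent: Optional[str]


# --- Swarm pattern: agents hand off to each other; no central controller ---
# Hypothetical agents; outputs are stubbed strings.
def search_agent(task: str) -> Handoff:
    return Handoff(f"docs about {task}", next_agent="translate")

def translate_agent(text: str) -> Handoff:
    return Handoff(f"translated({text})", next_agent="answer")

def answer_agent(text: str) -> Handoff:
    return Handoff(f"answer based on {text}", next_agent=None)

SWARM: dict[str, Callable[[str], Handoff]] = {
    "search": search_agent,
    "translate": translate_agent,
    "answer": answer_agent,
}

def run_swarm(task: str, start: str = "search", max_hops: int = 10) -> str:
    current, payload = start, task
    for _ in range(max_hops):  # guard against agents handing off forever
        result = SWARM[current](payload)
        if result.next_agent is None:
            return result.output
        current, payload = result.next_agent, result.output
    raise RuntimeError("too many handoffs")


# --- Supervisor pattern: one coordinator decides which sub-agent runs next ---
def collector(topic: str) -> str:
    return f"data on {topic}"

def writer(data: str) -> str:
    return f"report({data})"

def supervisor(topic: str) -> str:
    state = {"topic": topic, "data": None, "report": None}
    while state["report"] is None:
        # The supervisor, not the sub-agents, inspects state and picks the next step.
        if state["data"] is None:
            state["data"] = collector(state["topic"])
        else:
            state["report"] = writer(state["data"])
    return state["report"]


print(run_swarm("GPU pricing"))   # answer based on translated(docs about GPU pricing)
print(supervisor("GPU pricing"))  # report(data on GPU pricing)
```

In a real system the routing decisions (the `next_agent` values and the supervisor’s `if` branches) would typically come from an LLM, but the control-flow difference stays the same: distributed handoffs versus a single point of orchestration.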

How should we prepare for the multi-agent era?

To use multi-agent systems effectively, human capabilities are just as important as the tools themselves. Two key qualities we emphasize are:

- Understanding of models

Learn how LLMs like HyperCLOVA X work and experiment with techniques like supervised fine-tuning (SFT) yourself.

- Practical application of frameworks

Combine various open-source tools and SDKs to suit your purpose and apply them to actual systems.

Multi-agents are no longer a technology of the future. We’re already working with various AI tools, and our work productivity and service quality depend on how we design and connect them. Now is the time to extend beyond single models and think about creating collaborative AI structures.

Which agents are working together for your service?

Watch the full session: Building multi-agent AI architectures

Implementing AI Agents with MCP

Choi Jangho, NAVER Cloud Solution Architect

Introducing AI agents to real services extends far beyond creating capable LLMs. The real challenge lies in ensuring LLMs connect seamlessly to external tools for effective collaboration. In this session, we explored the key connector—Model Context Protocol (MCP)—through actual use cases and implementation strategies.

Evolution from LLM to RAG to AI agent

Initially, LLMs could only respond to users’ questions and generate text. The introduction of retrieval-augmented generation (RAG) marked a significant advancement, enabling models to incorporate real-time data into their responses. Today, we’ve reached a stage where AI agents can autonomously interact with external tools and services.
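The retrieve-then-generate idea behind RAG fits in a few lines. The sketch below is a toy, assuming a tiny in-memory document list and plain word-overlap scoring in place of embeddings and a vector database; `build_prompt` only assembles the augmented prompt, and the actual LLM call is left out.

```python
import re

DOCUMENTS = [  # hypothetical knowledge base
    "AI Dev Day was held on July 15 in Seolleung, Seoul.",
    "MCP uses JSON-RPC 2.0 messages between clients and servers.",
    "HyperCLOVA X Audio converts one second of audio into about 50 tokens.",
]


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Rank documents by word overlap with the query (stand-in for vector search)."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str]) -> str:
    """Augment the user question with retrieved context before calling an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


print(build_prompt("When was AI Dev Day held?", DOCUMENTS))
```

Because the context is fetched at query time, updating `DOCUMENTS` changes the model’s answers without retraining it.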

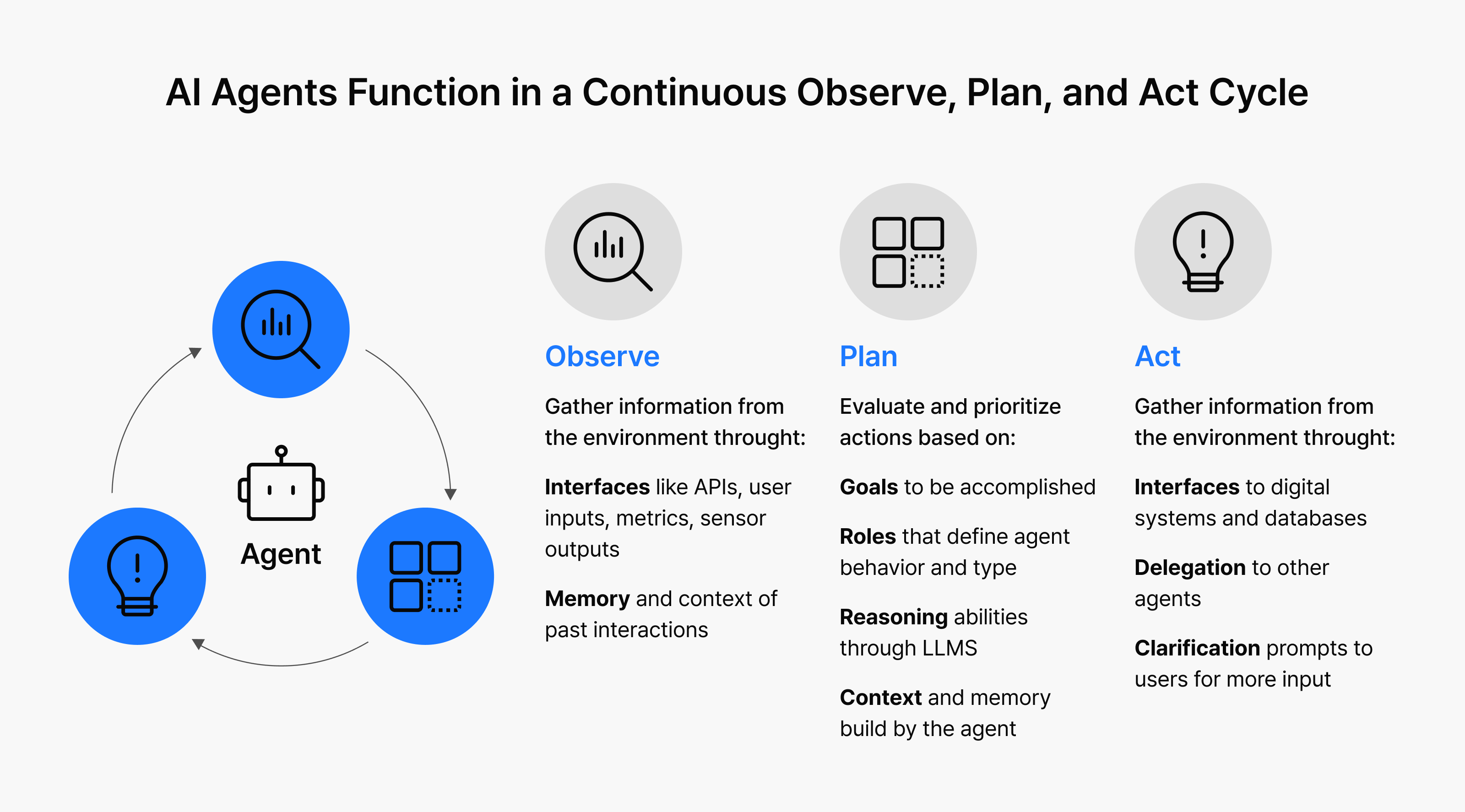

AI agents operate in a continuous cycle: Observe → Plan → Act

The critical factor is how effectively you can connect and call external tools, and whether the LLM can successfully orchestrate these interactions.

How AI agents work
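The Observe → Plan → Act cycle can be sketched as a loop in which a planner picks a tool, the runtime executes it, and the result is fed back as a new observation. This is a minimal sketch under clear assumptions: `plan` is a hard-coded rule standing in for an LLM deciding which tool to call (it ignores `goal`, which a real planner would condition on), and both tools, `get_weather` and `send_message`, are hypothetical.

```python
from typing import Callable, Optional

# Hypothetical tools the agent can call.
def get_weather(city: str) -> str:
    return f"{city}: sunny"

def send_message(text: str) -> str:
    return f"sent: {text}"

TOOLS: dict[str, Callable[[str], str]] = {
    "get_weather": get_weather,
    "send_message": send_message,
}


def plan(goal: str, observations: list[str]) -> Optional[tuple[str, str]]:
    """Stand-in for the LLM: choose the next (tool, argument), or None when done."""
    if not observations:
        return ("get_weather", "Seoul")
    if len(observations) == 1:
        return ("send_message", f"Weather update: {observations[0]}")
    return None  # goal satisfied


def run_agent(goal: str, max_steps: int = 5) -> list[str]:
    observations: list[str] = []
    for _ in range(max_steps):                 # cap the loop in case the planner never stops
        step = plan(goal, observations)        # Plan, based on what was observed so far
        if step is None:
            break
        tool_name, argument = step
        result = TOOLS[tool_name](argument)    # Act
        observations.append(result)            # Observe
    return observations


print(run_agent("Tell the team today's weather in Seoul"))
```

The orchestration quality the section describes lives almost entirely in `plan`: the loop itself is trivial, but choosing the right tool and argument at each step is where the LLM earns its keep.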

Making tool connection simpler with Model Context Protocol (MCP)

While this works well in theory, connecting tools in the real world requires more effort than expected. For example, adding Gmail, Notion, GitHub, and Slack to an LLM-powered application requires implementing different logic for each API, a repetitive and inefficient process.

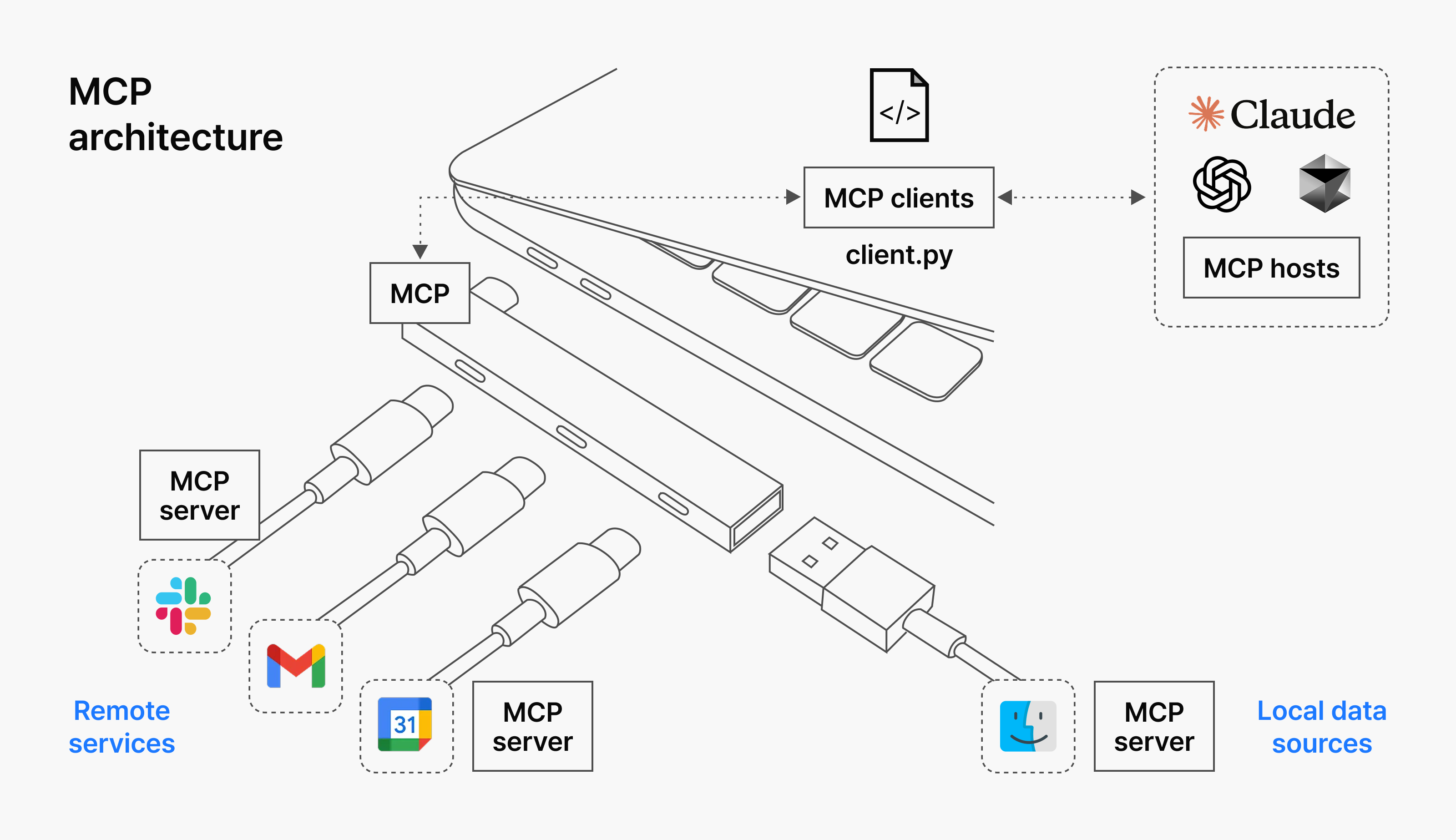

MCP emerged as a standard to address this exact problem. Proposed by Anthropic, MCP uses JSON-RPC 2.0 as the message format between LLM applications and external tools, dramatically simplifying integration:

- LLM application (e.g., one built on HyperCLOVA X or Claude) → MCP client

- External tool (e.g., Gmail, Notion, GitHub) → MCP server

With this standardization, any MCP client can connect to any MCP server without custom integration code, making feature additions and replacements much faster and more flexible.

MCP structure
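To show what a shared JSON-RPC format looks like in practice, the sketch below builds an MCP-style `tools/call` request and answers it, along with `tools/list`, from a toy in-process server. It is an illustration, not the official MCP SDK: the transport (stdio or HTTP), the `initialize` handshake, and most error handling are omitted, and the `search_notes` tool is hypothetical. The method names and the `inputSchema` and `content` result shapes are modeled on the MCP specification; check the spec version you target before relying on them.

```python
import json

# Hypothetical tool exposed by a toy MCP-style server (not the official SDK).
TOOLS = {
    "search_notes": {
        "description": "Search saved notes by keyword.",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
        "handler": lambda args: f"2 notes match '{args['query']}'",
    }
}


def handle(raw: str) -> str:
    """Toy server: answer one JSON-RPC 2.0 request for tools/list or tools/call."""
    request = json.loads(raw)
    if request["method"] == "tools/list":
        result = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif request["method"] == "tools/call":
        params = request["params"]
        text = TOOLS[params["name"]]["handler"](params["arguments"])
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        # -32601 is the standard JSON-RPC 2.0 "Method not found" error code.
        return json.dumps({"jsonrpc": "2.0", "id": request["id"],
                           "error": {"code": -32601, "message": "Method not found"}})
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


# Client side: the same request shape works for any MCP server.
call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "search_notes", "arguments": {"query": "MCP"}}}
response = json.loads(handle(json.dumps(call)))
print(response["result"]["content"][0]["text"])  # 2 notes match 'MCP'
```

Because discovery (`tools/list`) and invocation (`tools/call`) use the same envelope for every server, the client never needs tool-specific integration code, which is exactly the repetition MCP removes.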

NAVER Cloud’s MCP use cases

NAVER Cloud is actively working on various MCP-based projects. One practical example involved using MCP to implement a workflow that summarizes long videos and automatically organizes them in Notion. Watching Claude process everything autonomously with a single instruction—from extracting subtitles and summarizing comments to capturing screenshots and uploading to Notion—demonstrated the true power of AI agents.

MCP proves especially valuable in code management scenarios. You can create automation that analyzes GitHub issues, modifies relevant code, and processes commits automatically. Tools like Cursor AI make it straightforward to set up workflows for issue resolution.

One of the most frequent customer requests is adding real-time web search or internal data search capabilities to chatbots. Traditionally, implementing such features requires developing new APIs and configuring complex logic, which can be quite burdensome.

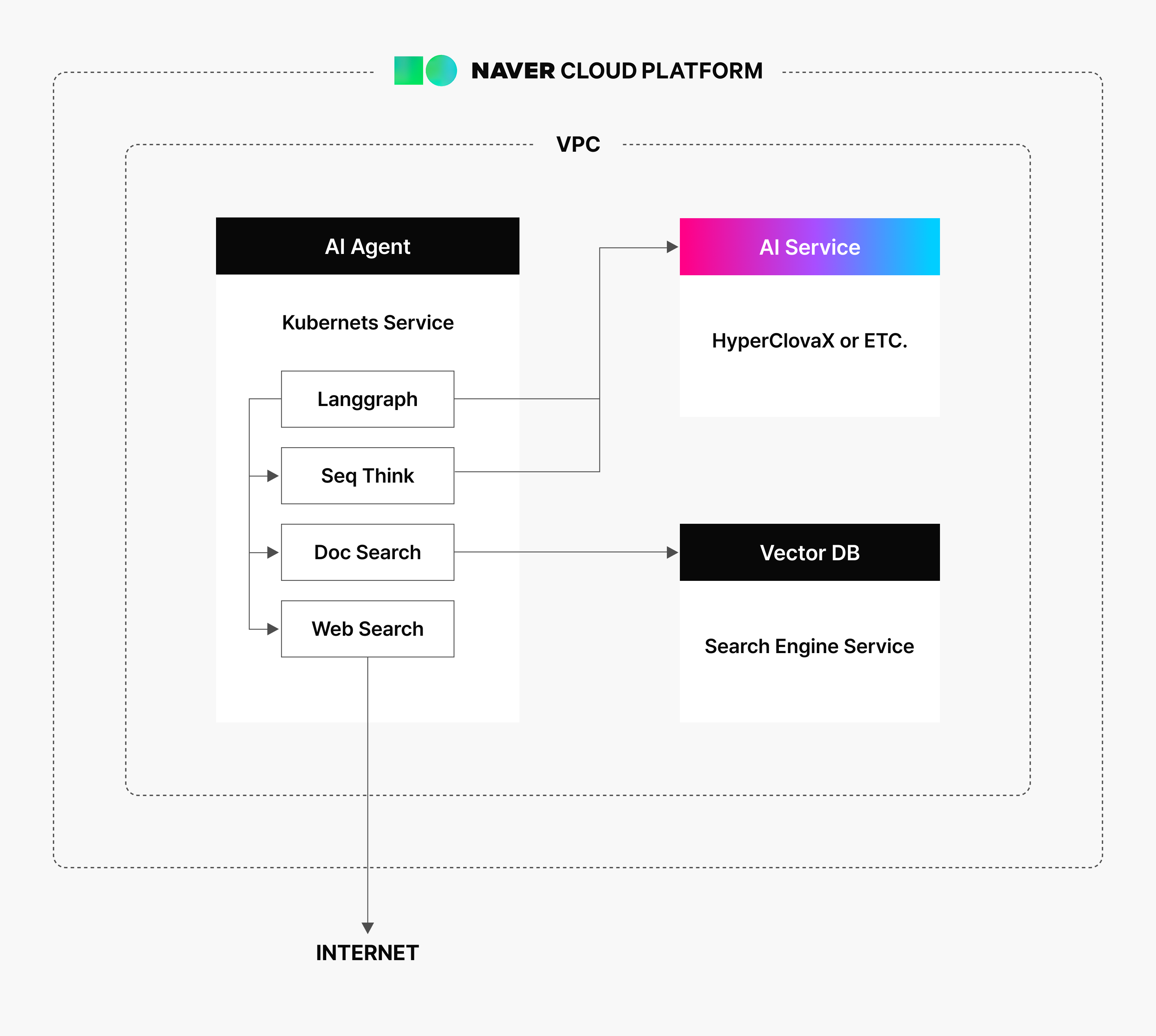

MCP transforms this process. You can add or replace tools using standardized methods for flexible configuration. For example, you can use LangGraph to design agent workflows, with the MCP client communicating internally with external MCP servers for web and document search.

NAVER Cloud Platform makes this structure easily achievable:

- LLM: Leverage HyperCLOVA X

- Vector database: Connect to NAVER Search DB or Pinecone

- Security: Configure servers as Docker containers locally
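The practical payoff of the structure described above is that the agent code does not change when a tool server is added or swapped. The sketch below models this with a hypothetical `ToolRouter` that aggregates tools from several MCP-style servers, represented as plain Python objects rather than real network connections, and routes each call by tool name. It uses no LangGraph, HyperCLOVA X, or MCP SDK code; every class here is an illustrative assumption.

```python
from typing import Callable, Protocol


class ToolServer(Protocol):
    """Minimal stand-in for an MCP server: it can list its callable tools."""
    def list_tools(self) -> dict[str, Callable[[str], str]]: ...


# Hypothetical servers; real ones would be separate processes or containers.
class WebSearchServer:
    def list_tools(self):
        return {"web_search": lambda q: f"web results for '{q}'"}


class DocSearchServer:
    def list_tools(self):
        return {"doc_search": lambda q: f"internal docs for '{q}'"}


class OtherWebSearchServer:
    """A drop-in replacement exposing the same tool name."""
    def list_tools(self):
        return {"web_search": lambda q: f"alt web results for '{q}'"}


class ToolRouter:
    """Aggregates tools from several servers; the agent only sees tool names."""
    def __init__(self, servers: list[ToolServer]):
        self.tools: dict[str, Callable[[str], str]] = {}
        for server in servers:
            self.tools.update(server.list_tools())

    def call(self, name: str, argument: str) -> str:
        return self.tools[name](argument)


def agent_step(router: ToolRouter, question: str) -> list[str]:
    # Agent logic stays identical regardless of which servers back the tools.
    return [router.call("web_search", question), router.call("doc_search", question)]


router = ToolRouter([WebSearchServer(), DocSearchServer()])
print(agent_step(router, "GPU quota"))

router = ToolRouter([OtherWebSearchServer(), DocSearchServer()])  # swap one server
print(agent_step(router, "GPU quota"))
```

Swapping `WebSearchServer` for `OtherWebSearchServer` is a one-line change at configuration time, while `agent_step` is untouched.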

Connection equals competitiveness

With the rise of agentic AI, connection has become essential—not optional. MCP acts as a bridge between tool developers and LLMs, expanding agents’ possibilities exponentially.

For agentic AI to achieve widespread adoption, this standardization-based connection approach will be essential. Tool developers can eliminate repetitive tasks while LLM developers can implement powerful workflows. If you’ve ever found tool connection daunting, now is the time to adopt MCP in your work.

Watch the full session: Implementing AI Agents with MCP

Creating conversational agents with HyperCLOVA X Audio

Choi Sanghyuk, NAVER Cloud researcher

“What would it be like to talk to AI like an actual person?”

Many of you might have imagined an AI we can talk to, like Samantha in the movie “Her” or Jarvis in “Iron Man.” At NAVER Cloud, we’re working to make that vision reality through HyperCLOVA X Audio, our audio LLM project.

In this session, we presented our work on an AI agent that goes beyond simple audio recognition to understanding and expressing emotion and tone, and we outlined where the technology is headed.

What is an audio agent?

Text accounts for only 10 percent of our conversation. The other 90 percent is dominated by non-linguistic elements like intonation, speed, and emotions. Building a truly conversational AI takes more than understanding and saying the words—it requires understanding the situation and emotion as context and responding naturally.

At NAVER, we primarily focus on creating an audio agent that can:

- Communicate with emotion, intonation, and context in mind

- Respond in near real-time

- Participate actively in conversations seamlessly

- Generate distinctive, human-like voices

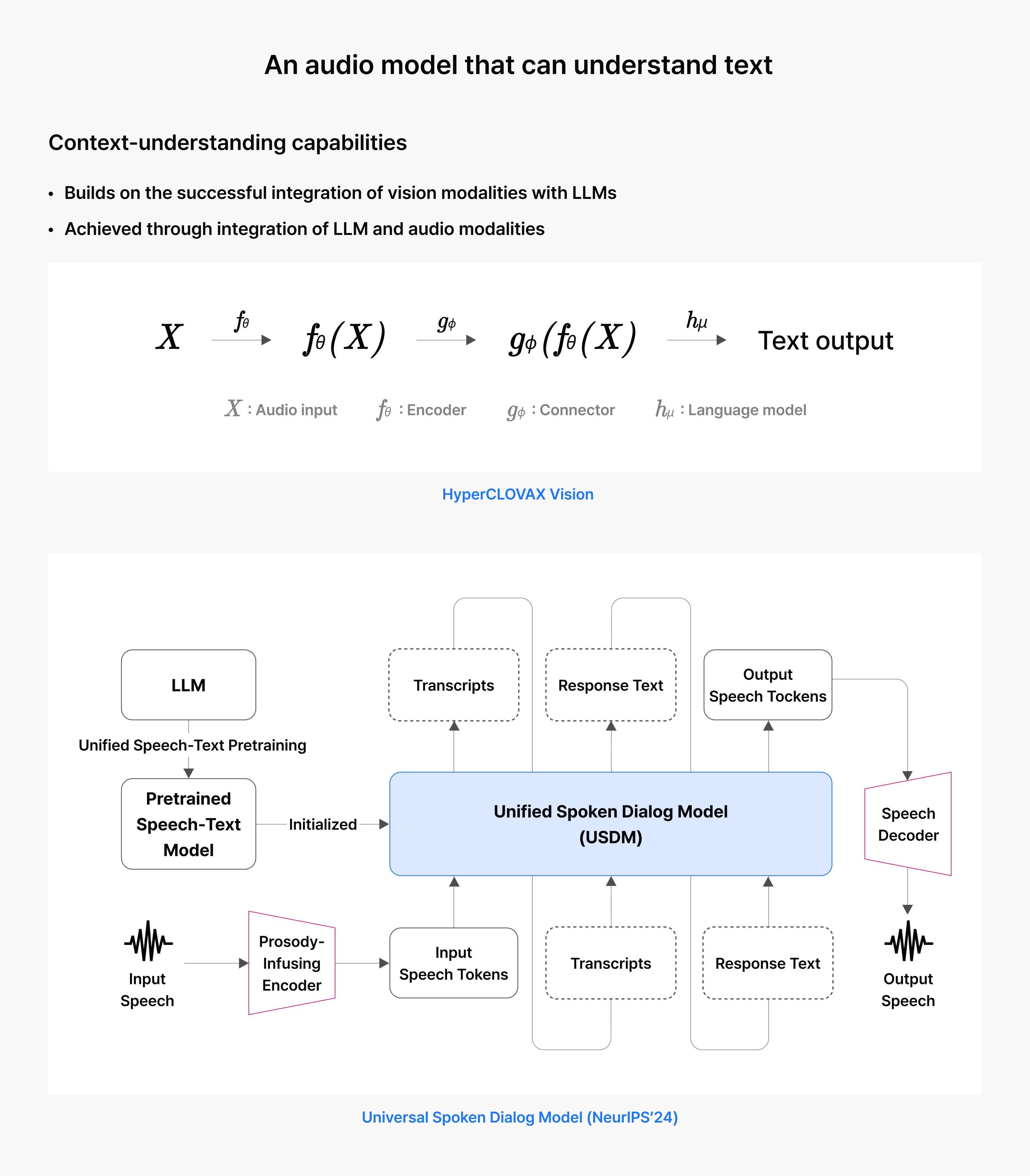

Limitations of traditional approaches and HyperCLOVA X Audio’s structure

Previously, audio AI used a three-step cascade structure: first converting speech to text (STT), then having the LLM generate a response, and finally using text-to-speech (TTS) to generate audio. Because only text passes between these stages, this approach could not fully capture a person’s tone or emotion, which made natural responses difficult.

To address this problem, HyperCLOVA X Audio is changing the structure itself to create an AI that can respond more naturally and quickly. Our goals are:

- Cascade to natural processing

Goes beyond simple processing to understanding and expressing emotions and intonation.

- Near real-time response

Prepares responses while users are still speaking, achieving approximately 300-millisecond response times.

- Real-time plus general intelligence

Can participate naturally in dynamic conversations, including appropriate interruptions.

To realize these goals, HyperCLOVA X Audio adopted an integrated LLM structure that inputs audio directly and responds in audio as well. Unlike the previous approach where processing was carried out in steps, we’ve evolved the structure to process everything naturally at once.

HyperCLOVA X Audio operates through three key stages:

- Codec processing

Converts audio into a format the LLM can understand. For example, one second of audio is converted into about 50 compressed tokens for processing.

- Pre-training

Trains the model on the audio-text relationship using large amounts of audio and text data to enhance its ability to predict what comes next.

- Fine-tuning

Fine-tunes our model using high-quality data and develops a voice engine that implements human-like tone and voice.
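The “about 50 tokens per second” figure implies one token per 20-millisecond frame. The toy tokenizer below makes that arithmetic concrete, assuming 16 kHz mono audio (so 320 samples per frame) and a hypothetical eight-entry codebook over frame loudness (RMS). Real neural codecs learn an encoder and quantize much richer vectors, often with residual vector quantization, so this is a sketch of the framing-and-quantizing idea, not of HyperCLOVA X Audio’s codec.

```python
import math

SAMPLE_RATE = 16_000      # assumed sampling rate (Hz)
TOKENS_PER_SECOND = 50    # figure quoted in the session
FRAME = SAMPLE_RATE // TOKENS_PER_SECOND  # 320 samples = 20 ms per token
CODEBOOK = [i / 7 for i in range(8)]      # hypothetical codebook of RMS levels in [0, 1]


def tokenize(samples: list[float]) -> list[int]:
    """Split audio into 20 ms frames and map each frame to its nearest codebook entry."""
    tokens = []
    for start in range(0, len(samples) - FRAME + 1, FRAME):
        frame = samples[start:start + FRAME]
        rms = math.sqrt(sum(s * s for s in frame) / FRAME)
        tokens.append(min(range(len(CODEBOOK)), key=lambda i: abs(CODEBOOK[i] - rms)))
    return tokens


# One second of a 440 Hz tone whose amplitude ramps up from 0 to 1.
one_second = [(n / SAMPLE_RATE) * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE)
              for n in range(SAMPLE_RATE)]
tokens = tokenize(one_second)
print(len(tokens))  # 50
print(tokens[:5], tokens[-5:])
```

At this rate, a 10-second utterance becomes roughly 500 tokens, which is why a compact codec matters for keeping audio sequences manageable for an LLM.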

While we currently use the traditional STT → LLM → TTS method for reliability, we’re developing an end-to-end structure where AI can understand and generate audio directly.

The demo below illustrates how AI reads aloud a PDF document naturally in a podcast format, using fine-tuned voices of real people.

Watch the PodcastLM demo: How AI creates personalized podcasts

Working toward a full-duplex audio LLM

Our ultimate goal is to build a full-duplex audio LLM that can communicate in real time without strict turn-taking. This natural-sounding AI model would prepare responses while listening to the user and deliver them immediately, without lulls in the conversation.
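The “prepare while listening” part of this goal can be illustrated with two concurrent tasks. The asyncio sketch below rests on simplifying assumptions: incoming audio is simulated as text chunks on a queue, `update_draft` is a hypothetical stand-in for incremental model inference, and end of speech is signaled by a `None` sentinel. It does not model the agent speaking over the user or handling interruptions; it only shows that refreshing the draft after every chunk leaves a reply ready the moment the user stops.

```python
import asyncio


def update_draft(heard: list[str]) -> str:
    """Hypothetical stand-in for incremental inference on everything heard so far."""
    return f"Reply to: {' '.join(heard)}"


async def user_speaks(queue: asyncio.Queue, chunks: list[str]) -> None:
    for chunk in chunks:
        await queue.put(chunk)
        await asyncio.sleep(0.01)  # simulated speaking pace
    await queue.put(None)          # end of the user's utterance


async def agent_listens(queue: asyncio.Queue) -> tuple[str, int]:
    heard: list[str] = []
    draft = ""
    drafts_prepared = 0
    while (chunk := await queue.get()) is not None:
        heard.append(chunk)
        draft = update_draft(heard)  # prepare the response while still listening
        drafts_prepared += 1
    return draft, drafts_prepared    # ready immediately, no extra wait


async def main() -> tuple[str, int]:
    queue: asyncio.Queue = asyncio.Queue()
    _, (reply, drafts) = await asyncio.gather(
        user_speaks(queue, ["can", "you", "summarize", "this"]),
        agent_listens(queue),
    )
    return reply, drafts


reply, drafts = asyncio.run(main())
print(reply)   # Reply to: can you summarize this
print(drafts)  # 4
```

In a turn-based cascade, inference would start only after the `None` sentinel arrives; here the work is spread across the user’s utterance, which is what removes the pause before the reply.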

The key to achieving this goal is integrating new modalities like audio and video into an LLM while preserving the advanced reasoning and articulation capabilities that are the strengths of existing LLMs.

At NAVER, we’re working to build a model that can solve complex mathematical problems while giving natural-sounding explanations of how it reached the answer. We’ll continue challenging ourselves to develop a truly conversational agent that breaks down barriers between AI and humans.

Watch the full session: Creating conversational agents with HyperCLOVA X Audio